Meta Lawsuits - Facebook & Instagram

An increasing number of children and teens are experiencing harm caused by Meta's platforms, Facebook and Instagram. Many families have started to file lawsuits against Meta to pursue damages for the harms these social media platforms have caused children and teens.

Contact our law firm today for a free evaluation of your child or teen’s case.

Written and edited by our team of expert legal content writers and reviewed and approved by Attorney Matthew Bergman

Content last updated on: June 12, 2025

- Updates on Lawsuits Against Facebook and Instagram

- Why Are People Filing Lawsuits Against Meta?

- Who Can File a Lawsuit Against Meta?

- What Damages Are Available in a Lawsuit Against Meta?

- How Do You File a Social Media Harm Lawsuit Against Meta?

- How Has Meta Acted Negligently to Cause Harm?

- Experienced Product Liability and Mass Tort Attorneys Holding Meta Accountable

May 2025 Updates On Lawsuits Against Meta, Facebook, and Instagram

Meta Faces Ongoing Legal Challenges Over Instagram Addiction Lawsuit

Meta Platforms Inc. must face a lawsuit filed in 2023 by the Massachusetts Attorney General alleging that Instagram’s features, such as infinite scrolling and autoplay, are intentionally designed to addict children and harm their mental health. In October 2024, a Massachusetts state court rejected Meta’s attempt to dismiss the case, finding that the lawsuit targets Meta’s own conduct, not user content. This lawsuit is part of a broader wave of litigation that includes a multidistrict litigation (MDL) formed in 2022 against Meta, Snap, TikTok, and YouTube, accusing them of designing platforms that encourage addictive behavior among youth. As of April 2025, the MDL includes over 1,700 cases. Meta denies the allegations and says it remains committed to user safety.

Instagram Flooded with Gore and Explicit Content—Meta’s Apology Falls Short

On February 27, 2025, Instagram users were flooded with graphic and violent content, including sexually explicit material, gore, and murder videos, even when they had enabled settings to block such content. The disturbing videos appeared in Reels recommendations, reaching minors and users who did not follow the creators. Reports on Reddit claimed that child pornography was also visible. Meta apologized, calling it an “error,” but did not clarify the scale of the issue. Major news outlets, including Bloomberg, The Wall Street Journal, and The Guardian, reported on the incident, highlighting the widespread exposure and the platform’s failure to prevent it.

Mark Zuckerberg Avoids Personal Liability in Meta Addiction Lawsuits

A federal judge rejected a second attempt to hold Mark Zuckerberg, CEO of Meta Platforms Inc., personally liable in two dozen lawsuits that accuse Meta and other social media companies of knowingly addicting children to their products. U.S. District Judge Yvonne Gonzalez Rogers, who is overseeing the social media addiction multidistrict litigation (MDL No. 3047), dismissed Zuckerberg as an individual defendant after ruling that a revised complaint still did not meet the legal standard for corporate officer liability. The lawsuits alleged that Meta employees warned Zuckerberg that Facebook and Instagram were not safe for children but that he ignored their findings.

On October 15, 2024, Judge Yvonne Gonzalez Rogers ruled that Meta, the parent company of Facebook and Instagram, must continue to face social media addiction lawsuits from more than 30 U.S. states claiming that its platforms harm children and teenagers through addictive algorithm designs. While the judge agreed that Section 230 of the Communications Decency Act of 1996 shields Meta from some of the states’ claims, she also ruled that the states had presented enough evidence of allegedly misleading statements by Meta to proceed with most of their claims. The decision allows the states to gather more evidence and potentially proceed to trial.

The ruling affected two lawsuits against Meta that accuse the company of fueling anxiety, depression, and body image issues in youth by building harmful and addictive algorithms into its platforms. The suits are also among many that accuse Meta of failing to adequately warn children and their parents about the risks of using its platforms. Lawsuits against Meta and other social media giants aim to hold the companies accountable for their business practices and seek financial compensation for victims.

Why Are People Filing Lawsuits Against Meta?

Parents and guardians are filing lawsuits against Meta because of the mental and physical harm its platforms, Facebook and Instagram, have caused younger users. Studies show that Facebook and Instagram have led to a rise in social media addiction among teens, which in turn has led to mental health issues and other harms.

While experts have warned about the mental health effects of social media for many years, the issue gained wider attention when Frances Haugen, a former Facebook manager, brought to light internal company documents that exposed Meta’s awareness of the harm its platforms cause users.

"The algorithms [Meta's] are explicitly designed to maximize user engagement, not give kids what they want to see but what they can't look away from. It subjects them to material that they aren't looking for, it makes them hate the way they look, hate their bodies, it encourages them to take risky behavior..."

Matthew Bergman on Voice of America News - December 11th, 2023

One such document indicated that Meta employees knew using Instagram led to body image issues for teens but that the company ignored the dangers. Following Haugen’s revelations, more and more families harmed by Instagram and Facebook have come forward and started pursuing lawsuits to hold Meta accountable.

Our Facebook & Meta Mental Health Lawsuits

To date, our law firm has filed hundreds of cases against Meta, representing over a thousand victims who have suffered harm because of Facebook and Instagram:

- July 24, 2024: Jessica Chittim and Samuel Chittim, residents of Camano Island, Washington, filed a Master Short-Form Complaint (Case No. 4:22-MD-03047-YGR) against Meta Platforms, Inc. (formerly Facebook, Inc.) and Instagram, LLC in the United States District Court for the Northern District of California. They allege severe personal injuries, including addiction, depression, anxiety, and self-harm behaviors, resulting from use of these social media platforms from approximately 2014 to 2024.

- July 23, 2024: In Case No. 4:22-MD-03047-YGR, filed in the United States District Court for the Northern District of California, Brandy Ball and Tyran Haulcy of Gowen, Michigan, representing the late Tahrique Haulcy, bring claims against Meta Platforms, Inc. (formerly Facebook, Inc.) and Instagram, LLC, seeking damages for addiction, depression, anxiety, and self-harm behaviors resulting from use of these social media platforms, including harms from viral challenges.

- July 23, 2024: In Case No. 4:22-MD-03047-YGR, Jeff Lucas Greedy and Ciguiri (Hannah) Greedy of Liberty Hill, Texas, filed a Master Short-Form Complaint against Meta Platforms, Inc. (formerly Facebook, Inc.) and Instagram, LLC in the United States District Court for the Northern District of California. They allege that use of these social media platforms caused addiction, depression, anxiety, and self-harm behaviors, including harms from viral challenges.

- July 23, 2024: Our firm filed “Danley v. Meta Platforms” as part of “In Re: Social Media Adolescent Addiction/Personal Injury Products Liability Litigation” (Case No. 4:22-md-03047-YGR), alleging that Meta’s platforms (Facebook and Instagram) caused significant harm to adolescents, including addiction and mental health issues. The lawsuit, filed in the Northern District of California, demands a jury trial to address these damages.

- October 5, 2022: C.U. and S.U. v. Meta Platforms Inc.: This Instagram harm lawsuit alleges that Meta and Snapchat caused a girl to become addicted to their products, resulting in severe mental health issues and suicide attempts. The lawsuit also alleges that Snapchat enabled, facilitated, and profited from her abuse.

- August 4, 2022: Jennifer Mitchell v. Meta Platforms Inc.: This lawsuit alleges Meta is responsible for the wrongful death of 16-year-old Ian James Ezquerra of New Port Richey, Florida.

- June 7, 2022: Alexis, Kathleen, and Jeffrey Spence v. Meta Platforms Inc.: Alexis Spence and her parents, Kathleen and Jeffrey Spence, filed this personal injury lawsuit against Meta. This lawsuit alleges that Instagram is responsible for Alexis’ social media addiction, beginning when she was just 11 years old, resulting in anxiety, depression, self-harm, an eating disorder, and suicidal thoughts.

- April 12, 2022: Donna Dawley vs. Meta Platforms Inc.: This wrongful death lawsuit against Meta seeks to hold the platform accountable for the suicide death of Christopher Dawley, 17, of Salem, Wisconsin, on January 4, 2015.

- January 20, 2022: Brittney Doffing and M.D. vs. Meta Platforms Inc.: This product liability lawsuit seeks to hold Meta accountable for harm resulting from the defective design and unreasonably dangerous features available to minors. It also alleges sex discrimination, claiming that Meta used gender information to target female users with content and accounts specifically harmful to them.

- January 20, 2022: Rodriguez v. Meta Platforms Inc.: This wrongful death lawsuit seeks to hold Meta accountable for the 2021 suicide of 11-year-old Selena Rodriguez of Enfield, Connecticut. The lawsuit alleges Meta defectively designed its products with unreasonably dangerous features.

Our attorneys apply principles of product liability and tort law to force social media companies to stop putting profits over consumer safety and design safer platforms that protect users from foreseeable harm.

Who Can File a Lawsuit Against Meta?

Parents may be able to file a lawsuit against Facebook and Instagram on behalf of their children or teens if the child was born after the year 2000 and has been physically or mentally harmed as a result of using the platforms. Common examples of harm in these cases include:

- Suicide or suicide attempts

- Depression

- Anxiety

- Eating disorders

- Loneliness

- Sleep disorders

Some argue that many teens would struggle with these same issues even if they weren’t on social media. However, studies continue to show that social media, including Meta’s platforms, contributes to low self-esteem, poor body image, fear of missing out, and mental and emotional health challenges that lead to worsening mental states.

Contact one of our lawyers today for a free case evaluation to determine your eligibility to file a harm lawsuit against Meta.

What Damages Are Available in a Lawsuit Against Meta?

In these lawsuits, plaintiffs may be eligible to receive different types of damages, including the following:

- Medical treatment for mental or physical health issues

- Therapy costs to treat mental or emotional disorders

- The victim’s pain and suffering

- Treatment and recovery costs

- Lost income related to disorders or injuries

- Punitive damages

Punitive damages are monetary damages designed to punish the defendant and deter future instances of the same act or behavior. Although rare, they may be awarded if the defendant acted willfully or wantonly in causing the harm.

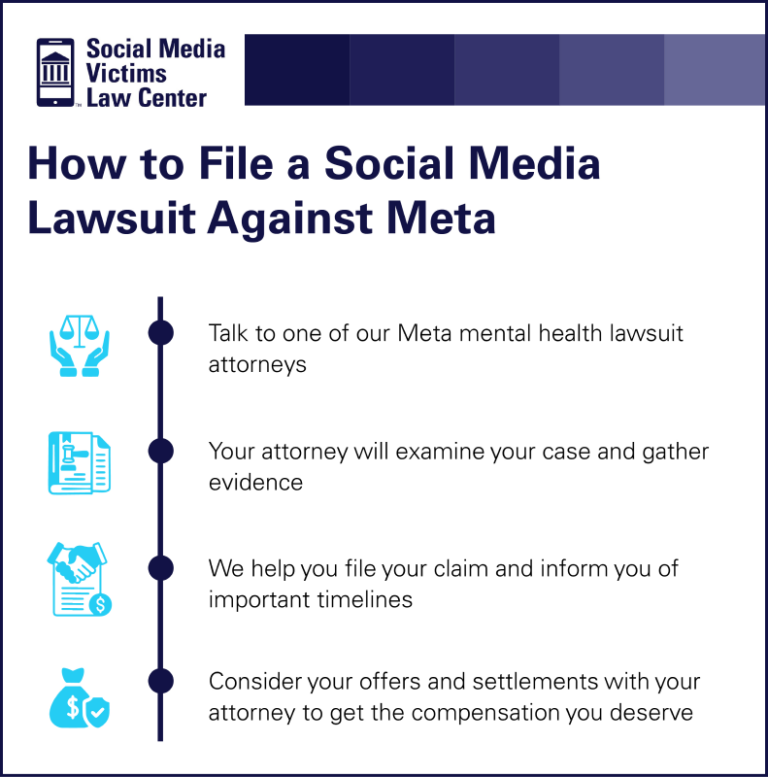

How Do You File a Social Media Harm Lawsuit Against Meta?

When your teen or child is experiencing harm because of Meta’s social media platforms, follow these steps to pursue a Facebook mental health lawsuit:

1. Talk to Our Lawyers

Your first step is to contact our experienced social media lawyers, who can explain your rights, examine your case, and guide you through the legal process.

2. Examine the Case and Investigate

Our lawyers will review your child’s case to assess the damage and determine the cause. This process will involve a thorough investigation, research, and gathering evidence.

3. Start the Paperwork and Filing

Filing a Facebook or Instagram mental health lawsuit involves several procedural requirements. Our firm can guide you through preparing and filing the paperwork related to the lawsuit.

4. Pay Attention to Dates and Deadlines

Our team will help keep you up to date and informed on the deadlines you must follow, including important filing deadlines.

5. Consider Offers and Settlements

These lawsuits are still developing, and settlement amounts will become clearer as the litigation against Meta continues in 2025.

6. Get the Compensation You Deserve

Once you become our client, our law firm’s goal is to seek justice on behalf of your child or teen and get you the compensation you rightfully deserve.

How Has Meta Acted Negligently to Cause Harm?

Lawsuits allege that Meta chose to put profits over safety and neglected to address serious issues with its products and marketing tactics:

- Meta’s products are defective by design.

- Meta’s products are marketed without proper warnings or instructions.

- Meta displayed negligence in marketing harmful products to youths without conducting research or warning the public.

Meta’s Products Are Defective by Design

Meta’s design defects are especially damaging because no reasonable parent or user would assume that Meta would intentionally create a harmful product. However, the evidence proves otherwise:

- Meta’s products lack safeguards that could prevent minors from exposure to harmful and exploitative content, even though such features could be easily included.

- Meta allows users under 13 to access its platforms in violation of federal laws and without parental consent by failing to require age or identity authentication.

- Meta’s products lack parental controls and are intentionally designed to thwart parental monitoring and restriction.

- Meta’s products intentionally recommend and promote harmful and exploitative content to young users.

- Meta’s products have no mechanisms to report sex offenders to law enforcement or allow users or parents to do the same.

- Meta has intentionally designed addictive products that exploit the incomplete brain development of youths and manipulate the brain reward system.

- Meta platforms lack a notification system for parents when teens and children engage in problematic use, even though this can easily be detected by the platforms’ algorithms.

Meta Has Failed to Warn Users and Parents About the Known Dangers of Its Products

Without adequate warnings, Meta’s users cannot use its products safely, because they cannot reasonably be expected to know about dangers such as:

- The potential for addiction and its effects

- The risk of sleep deprivation and its effects

- The lack of mechanisms to report minors’ screen time to their parents

Meta Has Demonstrated Negligence

Meta has neglected its duty to provide users with safe products in the following ways:

- Meta has neglected to allow independent testing of its products’ effects or to conduct adequate testing of its own.

- Meta has failed to provide ample warnings to users and their parents about the known dangers of using its products.

- Meta has neglected to investigate and restrict the use of Meta products by sexual predators.

- Meta has neglected to provide parents with the necessary tools to ensure its platforms are used in a safe and limited manner.

Experienced Product Liability and Mass Tort Lawyers Holding Facebook and Instagram Legally Accountable

When your child or teen has experienced mental or physical harm related to their use of Facebook and Instagram, it’s time to talk to a lawyer. Our founder, Attorney Matt Bergman, and our team of social media lawyers at the Social Media Victims Law Center have over 100 years of combined experience handling product liability cases and are on a mission to help families seek justice against social media companies.

If your child has experienced mental or physical harm from using one of Meta’s products, contact our law firm today for a free case evaluation: (206) 741-4862.

Meta Mental Health Lawsuit FAQs

What Is Meta?

First introduced in 2004, Facebook was initially available to college students with an official school email address. Tech mogul Mark Zuckerberg started the networking website as a Harvard University student.

Facebook acquired Instagram, the social media platform focused on sharing photographs and videos, in 2012, and the company changed its name to Meta in 2021. Facebook is the most-used social media platform globally, with nearly 3 billion monthly active users, and 70 percent of internet users are active on at least one Meta platform.

Meta Lawsuit Case Review

Our law firm specializes in lawsuits against Facebook and Instagram on behalf of children and teens.